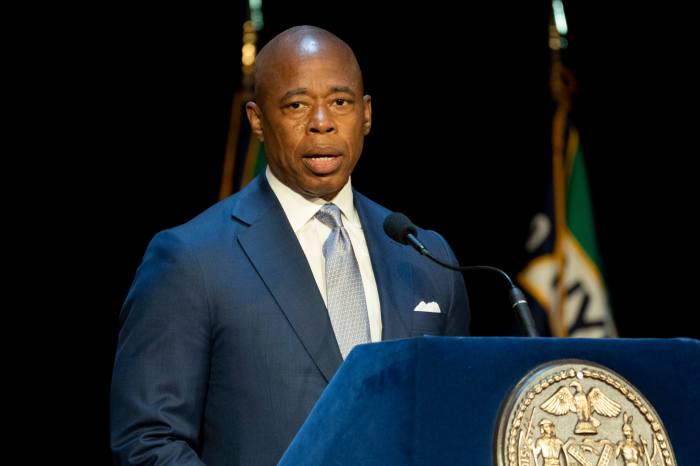

Can a mathematical formula predict a criminal defendant’s likelihood of jumping bail or re-offending?

It may seem like something out of “Minority Report,” but some states are already using such methods to determine bail, release conditions and even the probationary status of certain prisoners.

In Massachusetts, members of a state group reviewing the criminal justice system said this week they could see a similar system working in the Bay State, at least for determining pretrial bail conditions, according to a State House News report. Those remarks came after members of the Council of State Governments Justice Center reportedly presented findings to the group Wednesday indicating that certain pretrial decisions in state courts “are not informed by individualized, objective, research-driven assessment of risk of flight or pretrial misconduct.”

According to the Justice Center, the algorithmic tools weigh indicators such as criminal history, the nature of the pending charges, employment and residence history, and whether the defendant has any mental health or addiction issues to determine a defendant’s overall level of risk.

The hope is that such automated systems would remove, or at least mitigate, much of the subjective decision-making inherent in judicial rulings, while providing an objective, data-driven analysis of how likely a defendant is to re-offend while out on bail. In theory, the tools would help judges check their own biases while also easing jail costs and overcrowding.

But a number of studies show that the algorithms have the potential to reinforce existing socioeconomic and racial disparities in the court system. Jurisdictions across the country use about 60 different kinds of algorithms with varying approaches, according to the Associated Press, which complicates efforts to verify their accuracy. Still, some troubling examples have emerged.

A report released earlier this year by the journalism nonprofit ProPublica analyzed an algorithm developed by Northpointe, a software company whose model is among the most widely used assessment tools in the country. In one Florida jurisdiction using the model, the report found that only 20 percent of the people predicted to commit violent crimes went on to do so. It also found that, even after controlling for factors such as prior crimes, age and gender, black defendants were 77 percent more likely to be flagged as at “high risk” of committing a future violent crime, and 45 percent more likely to be predicted to commit a future crime of any kind.

Northpointe later released limited information to the organization, revealing that factors such as a defendant’s education level, whether the defendant was unemployed, and whether the defendant’s parents divorced when he or she was a young child were all considered when ranking an individual’s risk.

“Is it fair to make decisions in an individual case based on what similar offenders have done in the past? Is it acceptable to use characteristics that might be associated with race or socioeconomic status, such as the criminal record of a person’s parents?” reads a report from the criminal justice nonprofit The Marshall Project.

State explores letting numbers determine risk of defendants jumping bail or re-offending

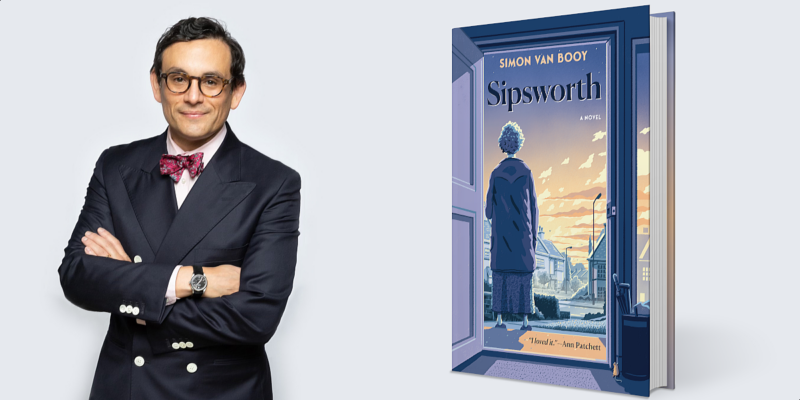

Derek Kouyoumjian/Metro