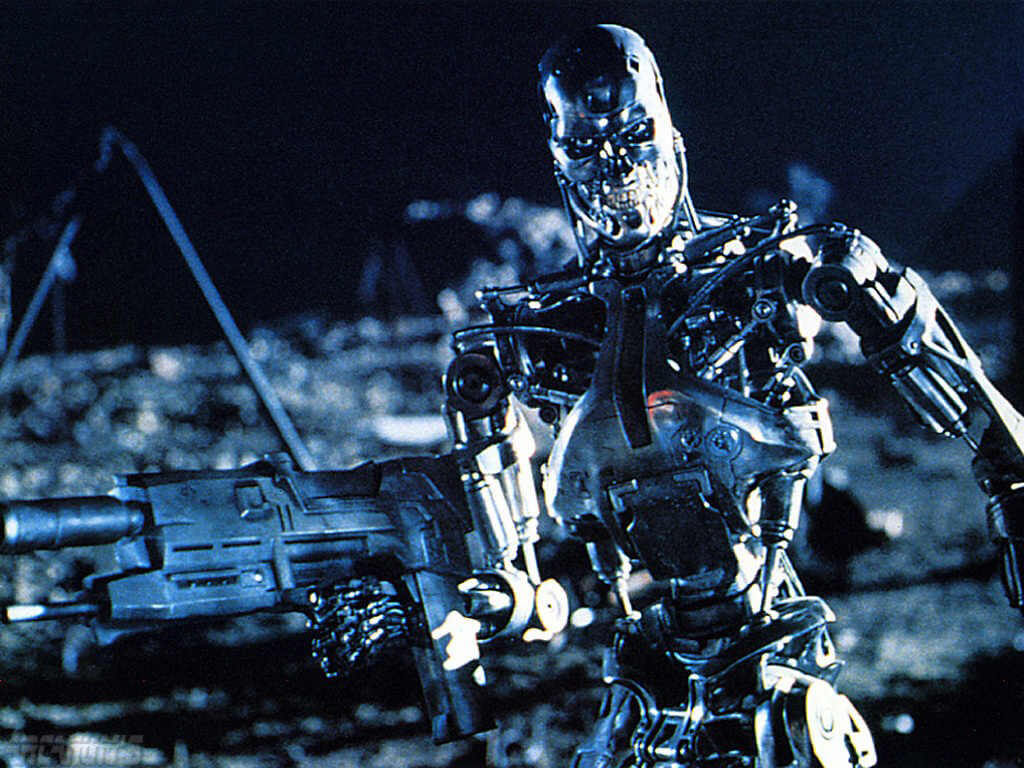

“Terminator”-style killer robots are not just a nightmarish scenario from sci-fi movie fantasy: the prospect of lethal machines obliterating mankind is under discussion at a major UN multilateral meeting in Geneva. The week-long session on “lethal autonomous weapons systems” (LAWS), attended by 117 UN members, is examining the rationale for giving machines the freedom to locate and kill enemies without human intervention. Meanwhile, campaign group Human Rights Watch has issued a report calling for a worldwide ban on such unmanned weapon systems before they are ever built. “The lack of meaningful human control would make it difficult to hold anyone criminally liable,” Mary Wareham, coordinator of the ‘Campaign to Stop Killer Robots’ initiative at Human Rights Watch, told Metro.

Killer robots – what’s all the fuss about?

– There are serious ethical, legal, technical and operational concerns about weapons systems that would select and attack targets without further human intervention. Paramount is the objection to giving a machine the power to take a human life on its own, whether on the battlefield, in law enforcement or in other situations. Other potential threats include an arms race and proliferation to armed forces with little regard for the law.

What other drawbacks are there?

– Fully autonomous weapons would be prone to many possible failures, including malfunctions, communications degradation, software coding errors, enemy cyber attacks or infiltration of the industrial supply chain, jamming, spoofing, decoys, and other enemy countermeasures or actions.

Would such a machine be truly autonomous, completely free-thinking?

– Once deployed, they would be able to select and fire on targets without meaningful human control. Although they do not exist yet, the development of precursors and military planning documents indicate that technology is moving rapidly in that direction. Semi-autonomous weapons, such as existing armed drones, are remote-controlled by human operators who make the decision to select and fire on targets.

If we build the bots correctly, surely all of their operations would be ‘lawful’, right?

– There are grave doubts that fully autonomous weapons could ever replicate human judgment and comply with the legal requirement to distinguish civilian from military targets. They would be prone to causing civilian casualties in violation of international humanitarian law. The lack of meaningful human control that characterizes these weapons would make it difficult to hold anyone criminally liable for such unlawful actions.

What benefits could be claimed for these machines?

– Some contend that these weapons would reduce the risks to soldiers and could increase the accuracy of attacks and the speed of response. They argue that fully autonomous weapons’ lack of human emotions could have military and humanitarian benefits, as the machines would be immune to factors such as fear, anger, pain, and hunger, which can cloud judgment, distract humans from their military missions, or lead to attacks on civilians. However, humans possess empathy and compassion and are generally reluctant to take the life of another human.

What’s your proposal?

– Human Rights Watch is a co-founder of the Campaign to Stop Killer Robots, a global coalition of non-governmental organizations that calls for a preemptive ban on the development, production, and use of fully autonomous weapons systems. The current multilateral talks on the matter should move urgently to negotiate a new international treaty enshrining the principle of meaningful human control of weapons.
